Real-time Indoor 3D Scene Reconstruction in 2023

This is the first post in our series on "real-time 3D scene reconstruction in 2023".

Image by Christel from Pixabay

1. Introduction

Indoor 3D reconstruction involves creating a digital model of an indoor environment. Common techniques include structure-from-motion (SfM) and multi-view stereo (MVS) algorithms, LiDAR scanning, photogrammetry, and RGB-D cameras. SfM and MVS algorithms reconstruct a 3D model from a set of images taken from different viewpoints. LiDAR scanning measures distances to surfaces using laser light. Photogrammetry uses photographs to measure and generate information about physical objects and environments. Lastly, RGB-D cameras combine a traditional RGB camera with a depth sensor to provide both color and depth data.
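To make the RGB-D idea concrete, here is a minimal sketch of how a depth frame yields 3D geometry: with the pinhole camera model, each depth pixel can be back-projected to a point in the camera's coordinate frame. The intrinsics (`fx`, `fy`, `cx`, `cy`), the millimetre depth units, and the tiny depth image are hypothetical values chosen for illustration; real values come from the camera's calibration, and camera SDKs such as Intel RealSense ship their own deprojection helpers.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]


def backproject_pixel(u: int, v: int, depth_mm: int,
                      fx: float, fy: float, cx: float, cy: float) -> Optional[Point3D]:
    """Convert pixel (u, v) with a depth reading into a 3D point in metres,
    expressed in the camera's coordinate frame (pinhole model)."""
    if depth_mm == 0:              # many depth sensors report 0 for "no measurement"
        return None
    z = depth_mm / 1000.0          # millimetres -> metres
    x = (u - cx) * z / fx          # horizontal offset from the principal point, scaled by depth
    y = (v - cy) * z / fy          # vertical offset from the principal point, scaled by depth
    return (x, y, z)


def depth_to_points(depth: List[List[int]],
                    fx: float, fy: float, cx: float, cy: float) -> List[Point3D]:
    """Back-project every valid pixel of a depth image (rows indexed by v)."""
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            p = backproject_pixel(u, v, d, fx, fy, cx, cy)
            if p is not None:
                points.append(p)
    return points


# Hypothetical 2x2 depth image (mm) and intrinsics, for illustration only
depth = [[1000, 0],
         [2000, 1500]]
pts = depth_to_points(depth, fx=500.0, fy=500.0, cx=0.5, cy=0.5)
print(len(pts), "valid points")
```

The zero-depth pixel is skipped, so only three points are produced. Colour from the aligned RGB image can be attached to each point in the same loop.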

This article will review the technologies available for 3D scene reconstruction in an indoor environment. Applications for these technologies range from simply measuring the dimensions of a room to preserving cultural heritage in 3D.

We will first examine AI-based approaches that reconstruct 3D models from camera input. We will then demonstrate a real-time 3D scene reconstruction using a hybrid approach that relies on input from both a camera and other sensors. Finally, we will conclude by comparing the two approaches.

2. Use cases

According to ChatGPT, indoor 3D reconstruction has a variety of applications, including the following:

Virtual and augmented reality: To create detailed virtual environments for use in virtual and augmented reality applications.

Robotics: To create detailed maps of indoor environments for autonomous navigation and localization applications within robots.

Facility management: To create detailed models of buildings for use in facility management, including space planning and maintenance.

Surveillance and security: To create detailed models of buildings for use in surveillance and security systems, including tracking people and objects.

Gaming: To create detailed virtual environments for video games and simulations.

Real estate and architecture: To create detailed models of buildings for real estate and architecture, including architectural visualizations and virtual tours.

Heritage preservation: To create detailed models of heritage sites for use in preservation and education.

Interior design: To create detailed models of interior spaces for use in interior design, including space planning and visualization.

3. State-of-the-art Approaches

This section will present some examples of the technologies currently being used in different domains.

3.1. AI

AI-based technologies, which build on SfM and MVS, rely on camera input alone to create a 3D model by predicting a depth map for each frame.
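Predicting a depth map per frame is only half the job: each frame's points are expressed in that frame's camera coordinates, so they must be moved into a shared world frame using the camera pose estimated for that frame (for example, by SfM) before the frames can be merged. The sketch below assumes poses are given as 4x4 camera-to-world matrices stored as nested lists; the function names and example poses are hypothetical.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]


def transform_point(pose: Matrix4, p: Point3D) -> Point3D:
    """Apply a 4x4 camera-to-world transform [R | t] to a 3D point."""
    x, y, z = p
    return tuple(
        pose[i][0] * x + pose[i][1] * y + pose[i][2] * z + pose[i][3]
        for i in range(3)
    )


def merge_frames(frames: List[Tuple[Matrix4, List[Point3D]]]) -> List[Point3D]:
    """Place every frame's camera-space points into one world-space point cloud."""
    world: List[Point3D] = []
    for pose, points in frames:
        world.extend(transform_point(pose, p) for p in points)
    return world


# Two hypothetical frames: the second camera sits 1 m further along the x axis
identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
shifted = [[1, 0, 0, 1.0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
cloud = merge_frames([(identity, [(0.0, 0.0, 2.0)]),
                      (shifted, [(0.0, 0.0, 2.0)])])
print(cloud)
```

The same camera-space point ends up at two different world positions because the cameras were in different places. Errors in the estimated poses show up directly as misaligned geometry, which is one reason these pipelines are sensitive to the amount and quality of the captured data.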

The advantage of these techniques is that data can be collected with an ordinary mobile camera, so capture is quick. However, building the 3D model requires a dedicated GPU-enabled machine, and processing takes much longer than capture. A further drawback of AI-based techniques is that the results depend heavily on the amount of data collected and the computational power available.

Various mobile applications [1], [2] let users capture data and upload it to the provider's servers for processing. Once processing is complete, the provider sends the finished model back to the user.

Here is an example of a 3D model generated from video captured on a mobile camera (without LiDAR) using a state-of-the-art approach [3]. Processing took around 3 hours for 2 minutes of video on a CUDA-enabled system [6].

3.2. Hybrid

The hybrid approach relies primarily on data from depth sensors, such as LiDAR or RGB-D cameras, to create a 3D model of a space. It can also be combined with neural networks to enhance the quality of the output.

Hybrid techniques are the preferred choice when the accuracy of the final model is the top priority. However, like the AI-based approach, they require significant computational power to process large amounts of data.

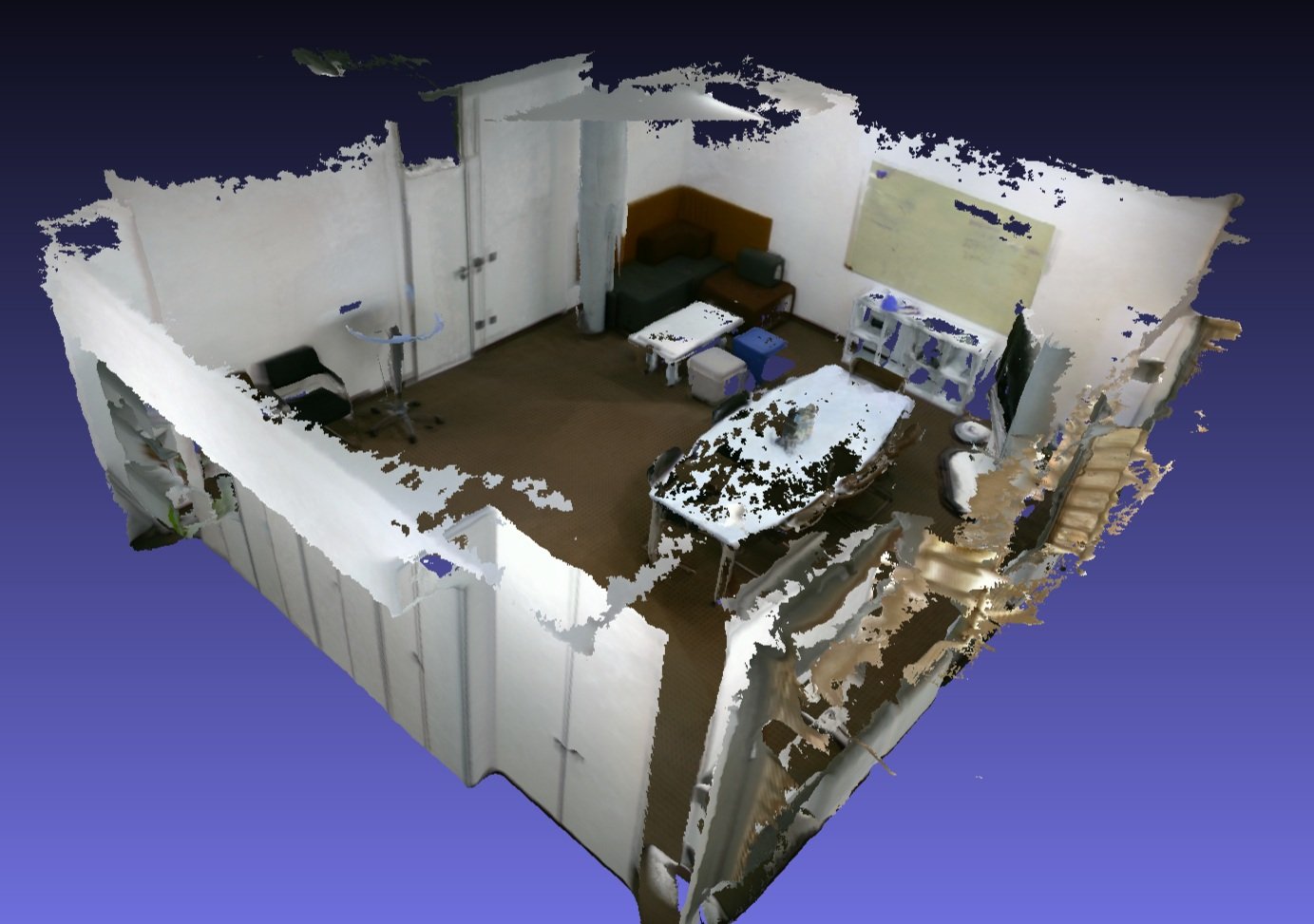

Here is a mesh created from data collected with an Intel RealSense depth camera [4] and processed with the Open3D library [5]. Processing took 30 minutes for 2 minutes of recording on the same CUDA-enabled system [6].
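Depth-camera pipelines such as Open3D's RGB-D reconstruction system commonly fuse frames into a truncated signed distance function (TSDF) volume and then extract a mesh from it. The exact settings behind the mesh above are not described here, so the following is only a simplified, pure-Python illustration of the per-voxel weighted-average update at the heart of TSDF fusion, reduced to the voxels along a single camera ray. It is not Open3D's API; the class name, voxel size, and truncation distance are hypothetical.

```python
from typing import Optional


class RayTSDF:
    """Truncated signed distance values for the voxels along one camera ray."""

    def __init__(self, voxel_size: float, num_voxels: int, trunc: float):
        self.z = [(i + 0.5) * voxel_size for i in range(num_voxels)]  # voxel centres (m)
        self.trunc = trunc                  # truncation distance (m)
        self.tsdf = [1.0] * num_voxels      # normalised signed distance per voxel
        self.weight = [0.0] * num_voxels    # accumulated weight; 0 means never observed

    def integrate(self, depth: float, w: float = 1.0) -> None:
        """Fuse one depth measurement (metres) into the running weighted averages."""
        for i, z in enumerate(self.z):
            sdf = depth - z                  # positive in front of the surface
            if sdf < -self.trunc:            # far behind the surface: occluded, skip
                continue
            d = min(1.0, sdf / self.trunc)   # truncate and normalise to [-1, 1]
            w_old = self.weight[i]
            self.tsdf[i] = (w_old * self.tsdf[i] + w * d) / (w_old + w)
            self.weight[i] = w_old + w

    def surface(self) -> Optional[float]:
        """Locate the surface as the first positive-to-negative zero crossing."""
        for i in range(len(self.z) - 1):
            a, b = self.tsdf[i], self.tsdf[i + 1]
            if self.weight[i] > 0 and self.weight[i + 1] > 0 and a > 0 >= b:
                # linear interpolation between the two voxel centres
                return self.z[i] + (self.z[i + 1] - self.z[i]) * a / (a - b)
        return None


# Three noisy readings of a wall 1 m away (hypothetical values)
ray = RayTSDF(voxel_size=0.01, num_voxels=300, trunc=0.04)
for reading in (1.00, 1.02, 0.98):
    ray.integrate(reading)
print(ray.surface())
```

Averaging over many frames is what lets fusion smooth out sensor noise: the individual readings disagree by 2 cm, but the fused zero crossing lands at about 1.00 m. A full system applies this update to every voxel in a 3D grid and then extracts the zero-level surface as a triangle mesh.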

4. Real-time Scene Reconstruction

Almost all contemporary techniques follow an offline approach: data is collected first and processed later to create a 3D model. This limits the quality of the final model because there is no way to verify during capture that the collected data is sufficient to achieve the desired result. As discussed above, these techniques are also limited by their reliance on substantial computational power and by the volume of data that would have to be transferred in real time.
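To put the data-transfer constraint in numbers, here is a back-of-the-envelope calculation of raw RGB-D bandwidth. The stream settings (640×480 at 30 fps, 16-bit depth, 8-bit-per-channel RGB, no compression) are assumptions chosen for illustration, not the configuration used in our tests.

```python
def stream_bytes_per_second(width: int, height: int,
                            bytes_per_pixel: int, fps: int) -> int:
    """Raw (uncompressed) bandwidth of one image stream in bytes per second."""
    return width * height * bytes_per_pixel * fps


# Assumed stream settings, for illustration only
depth_bps = stream_bytes_per_second(640, 480, 2, 30)   # 16-bit depth
color_bps = stream_bytes_per_second(640, 480, 3, 30)   # 8-bit RGB
total_bps = depth_bps + color_bps

recording_seconds = 2 * 60  # the 2-minute recordings used in this article
total_bytes = total_bps * recording_seconds

print(f"{total_bps / 1e6:.1f} MB/s, {total_bytes / 1e9:.2f} GB for 2 minutes")
```

Under these assumptions, the sensor produces about 46 MB/s, or roughly 5.5 GB of raw data for a 2-minute recording. Streaming that to a remote server while reconstructing in real time is demanding, which is why processing close to the sensor matters.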

The results shown below were captured in real time: 2 minutes of recording, with 3D reconstruction running simultaneously.
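One simple way to compare the approaches is the ratio of processing time to recording time: a real-time system must keep this ratio at or below 1, so the model is ready as soon as capture ends. The sketch below uses the timings reported in Sections 3.1 and 3.2 (about 3 hours and 30 minutes respectively, each for 2 minutes of footage); the helper function is hypothetical.

```python
def processing_ratio(processing_minutes: float, recording_minutes: float) -> float:
    """Minutes of processing needed per minute of recording."""
    return processing_minutes / recording_minutes


ai_based = processing_ratio(3 * 60, 2)   # AI-based pipeline, Section 3.1
hybrid = processing_ratio(30, 2)         # RealSense + Open3D offline pipeline, Section 3.2

for name, ratio in [("AI-based", ai_based), ("hybrid (offline)", hybrid)]:
    status = "real-time" if ratio <= 1.0 else "offline"
    print(f"{name}: {ratio:.0f}x recording time -> {status}")
```

By this measure, the offline AI-based pipeline needs about 90 times the recording time and the offline hybrid pipeline about 15 times. A real-time system has to close that gap entirely.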

5. Conclusion

In 2023, we expect to see more advanced and sophisticated indoor 3D reconstruction technologies. For example, machine learning algorithms will likely become more prevalent, enabling more accurate and efficient 3D data processing. We also expect wider use of photogrammetry, which creates 3D models from photos and videos and makes data collection even more accessible.

However, when it comes to accurately creating a 3D model of any environment, combining sensors such as LiDAR or RGB-D cameras with AI techniques is preferable, because it brings together the benefits of both technologies. The sensors provide both color and depth information for improved accuracy, while AI improves the quality of the 3D models within an automated reconstruction process.

6. References

[1] PolyCam: https://poly.cam/

[2] CamToPlan: https://www.tasmanic.com/

[3] Sayed, M., Gibson, J., Watson, J., Prisacariu, V., Firman, M. and Godard, C., 2022, November. SimpleRecon: 3D reconstruction without 3D convolutions. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXXIII (pp. 1-19). Cham: Springer Nature Switzerland.

[4] Intel RealSense: https://www.intelrealsense.com/

[5] Open3D: http://www.open3d.org/

[6] System configuration used for testing: Intel Core i5-13600, NVIDIA GeForce RTX 3060, Ubuntu 22.04, CUDA 11.7